AI-Generated Content Sucks

And what that says about us

Predictability isn’t synonymous with boredom. If it were, we wouldn’t have kept watching Dr. House suspect lupus (it’s never lupus) episode after episode. We wouldn’t have watched Marvel save the universe with the same three-act structure for over a decade. We wouldn’t have pop music dominating the charts with the same four chords and the verse-chorus-verse-chorus-bridge-chorus formula.

There’s something deeply satisfying about predictability. Our brains reward us, in part through dopamine, when we correctly anticipate a pattern. That moment when you know the hero will get back up, that the song will explode into the final chorus, that House will have his epiphany in the last act - it doesn’t ruin the experience. It is the experience.

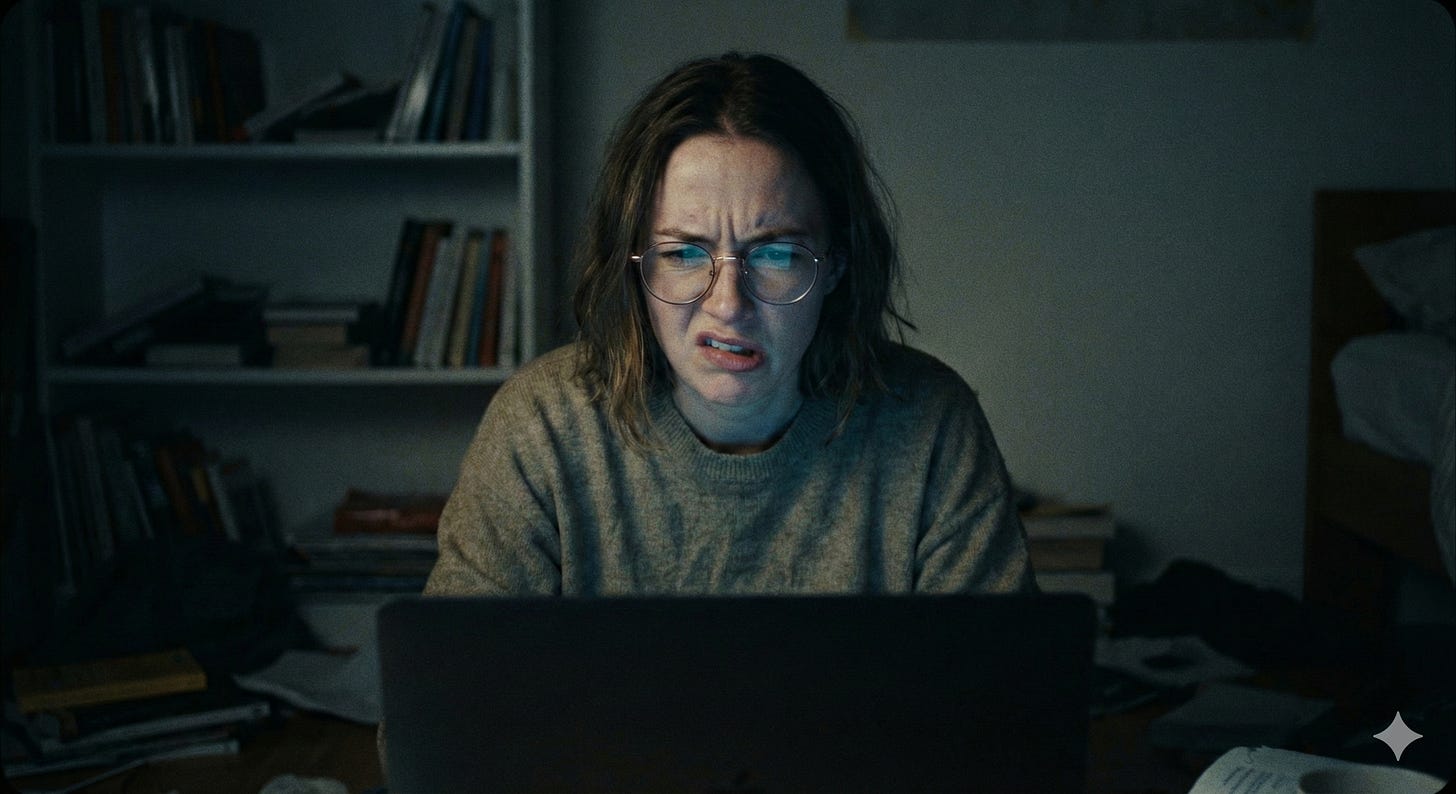

So why does AI-generated content provoke this visceral, almost instinctive rejection in so many people?

I have a theory. And it has less to do with the tool than we’d like to admit.

The rejection doesn’t hit everyone the same way. It seems to particularly affect two groups: late adopters, who are still discovering what these tools do, and power users, who already know exactly what they don’t do.

The former reject it out of fear or distrust; the latter, for a subtler reason.

When you spend enough time using AI - testing limits, identifying patterns, noticing where the tool slips, learning what separates a lazy prompt from a well-crafted one - you develop a radar. A sixth sense for detecting that particular cadence, that three-part structure, that forced enthusiasm that always seems to culminate in a rhetorical question.

But the radar doesn’t just detect the tool. It detects the effort. Or the lack thereof.

And all of a sudden, we start judging not the text, but the creator behind it. Is there curation here? Is there choice? Is there any friction between human and machine, or was this just a copy-and-paste job?

If the answer is no, why should we care? After all, there’s always another post one scroll away. The opportunity cost of our attention has never been higher.

But here’s where it gets interesting.

What happens when you can tell that something was written by AI - the mannerisms, the structure, the tone are all there - but the content still resonates? When it’s relevant, personalized, useful? When you can feel there’s a human mind behind the choices, even if the execution was delegated?

Then you’re in an uncomfortable position.

Your biases say reject it. Your experience says there’s value there. What weighs more: the origin of the content or its impact? The purity of the process or the usefulness of the result?

And there’s another layer to this whole story.

Maybe the real discomfort with AI-generated texts isn’t that they follow predictable patterns. Perhaps it’s that they expose how much we ourselves have always followed predictable patterns - except now we have something convenient to blame.

How many “original” texts follow exactly the same formulas? How many newsletters open with a personal anecdote and close with a call to action? How many LinkedIn posts follow the structure: problem → insight → solution → engagement question?

AI didn’t invent these formulas. It just made them more visible, more frequent, more... embarrassing.

Which leads us to an uncomfortable question: if we can identify AI-generated content by its patterns, and those patterns predate AI, what exactly are we rejecting? The tool or the mirror?